Key concepts

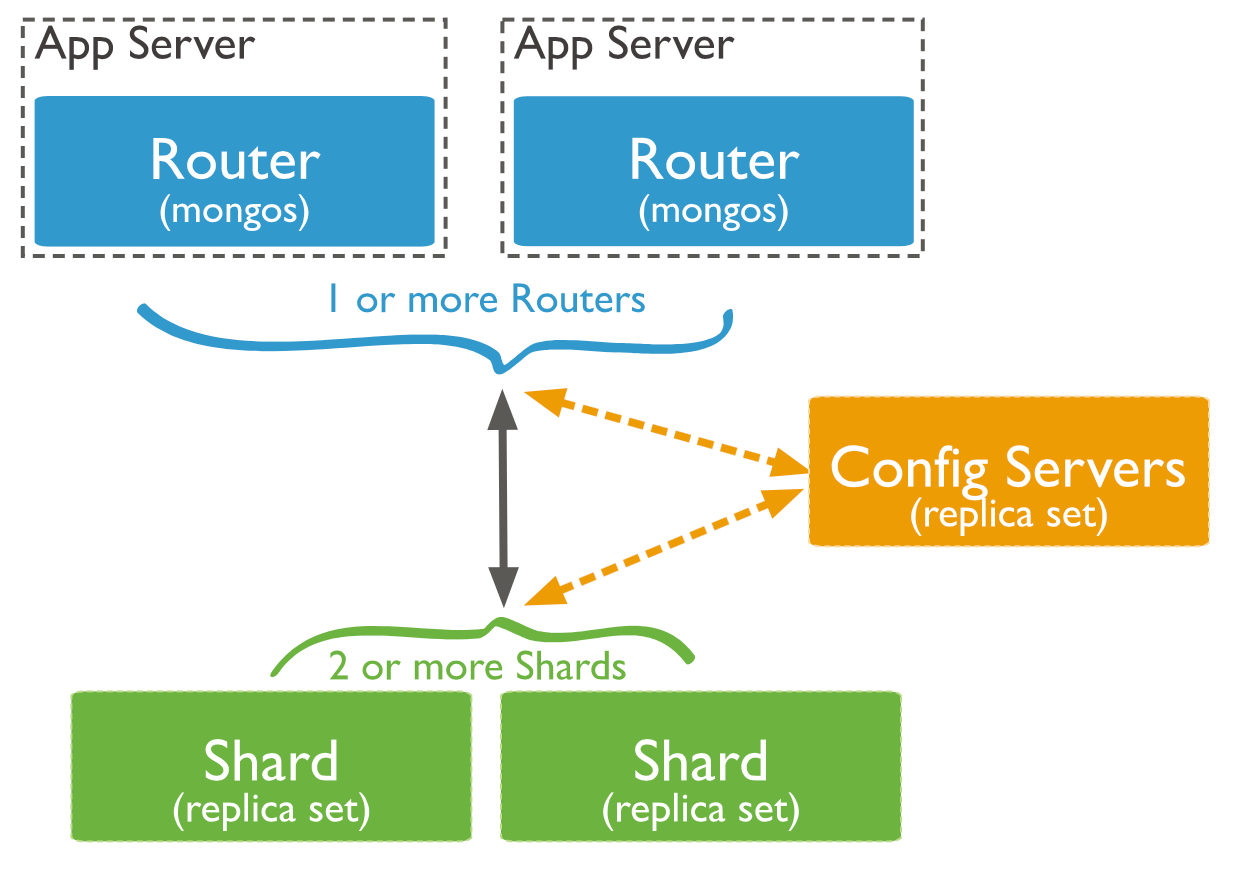

The diagram shows four components: mongos, config server, shard, and replica set.

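mongos is the entry point for all requests to the cluster. Every request is coordinated by mongos, so the application does not need a routing layer of its own: mongos acts as the dispatch center and forwards each request to the shard server that holds the relevant data. Production deployments usually run several mongos instances, so that the failure of one does not leave the whole MongoDB cluster unreachable.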

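config server, as the name suggests, is the configuration server. It stores the metadata for the whole cluster (routing and shard configuration). mongos does not persist the shard and routing information itself; it only caches it in memory, while the config servers hold the stored copy. mongos loads this configuration from the config servers when it first starts or is restarted, and every mongos updates its cached state when the metadata later changes, so it can keep routing accurately. Production deployments usually run several config servers, because they hold the shard routing metadata and that data must not be lost.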

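shard: sharding is the process of splitting a database and distributing it across multiple machines. Spreading the data out lets you store more data and handle a heavier load without needing a single powerful server. The basic idea is to cut a collection into small chunks and distribute those chunks across several shards, each of which holds only part of the total data; a balancer then keeps the shards even by migrating chunks between them.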
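
The chunk idea can be illustrated with a small sketch. The chunk boundaries, the shard names, and the functions `routeToShard` and `chunksPerShard` are all hypothetical; in a real cluster this metadata lives on the config servers and mongos does the lookup.

```javascript
// Hypothetical chunk table: each chunk covers [min, max) of the shard key
// and lives on exactly one shard.
const chunks = [
  { min: -Infinity, max: 1000, shard: "shard1" },
  { min: 1000, max: 2000, shard: "shard2" },
  { min: 2000, max: Infinity, shard: "shard3" },
];

// Return the shard that owns the chunk containing the given key value.
function routeToShard(chunkTable, key) {
  const chunk = chunkTable.find((c) => key >= c.min && key < c.max);
  if (!chunk) {
    throw new Error(`no chunk covers key ${key}`);
  }
  return chunk.shard;
}

// Count chunks per shard; a balancer would migrate chunks when these
// counts drift too far apart.
function chunksPerShard(chunkTable) {
  const counts = {};
  for (const c of chunkTable) {
    counts[c.shard] = (counts[c.shard] || 0) + 1;
  }
  return counts;
}

console.log(routeToShard(chunks, 1500)); // shard2
console.log(chunksPerShard(chunks));
```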

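replica set: a replica set is effectively the backup for a shard, and it protects against data loss when a shard member goes down. Replication keeps redundant copies of the data on multiple servers, which improves availability and helps keep the data safe.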

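Arbiter: an arbiter is a MongoDB instance in a replica set that holds no data. It needs minimal resources and no dedicated hardware. Do not run an arbiter on a host that already runs a data-bearing member of the same replica set; it can run on another application server, a monitoring server, or a separate virtual machine. An arbiter is added as a voter so that the replica set has an odd number of voting members (including the primary); otherwise, when the primary becomes unavailable, the remaining members may be unable to reach the majority needed to elect a new primary automatically.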
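
The reason for wanting an odd number of voters comes down to majority arithmetic. A minimal sketch, assuming every member has exactly one vote (the function names `majority` and `canElectPrimary` are hypothetical):

```javascript
// Votes needed to elect a primary: a strict majority of all voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// True if the members that are still reachable can elect a primary.
function canElectPrimary(votingMembers, unreachable) {
  return votingMembers - unreachable >= majority(votingMembers);
}

// Layout used in this article: primary + secondary + arbiter = 3 voters.
console.log(majority(3)); // 2
console.log(canElectPrimary(3, 1)); // true

// Two data nodes and no arbiter: losing one leaves 1 of the 2 votes needed.
console.log(canElectPrimary(2, 1)); // false
```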

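To summarize: the application sends its create, read, update, and delete operations to mongos; the config servers store the cluster metadata and keep mongos in sync with it; the data ultimately lives on the shards; each shard keeps a copy in its replica set to guard against data loss; and the arbiter stores no data but votes when a replica set elects its primary.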

Operating system: CentOS 7

Three servers: 192.168.1.1, 192.168.1.2, 192.168.1.3

MongoDB version: 3.2.8

Server plan

| Server 1 | Server 2 | Server 3 |
|---|---|---|
| mongos | mongos | mongos |
| config server | config server | config server |
| shard server1 primary | shard server1 secondary | shard server1 arbiter |
| shard server2 arbiter | shard server2 primary | shard server2 secondary |
| shard server3 secondary | shard server3 arbiter | shard server3 primary |

Port allocation:

- mongos:27017

- config:10004

- shard1:10001

- shard2:10002

- shard3:10003
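
Since every role uses the same port on all three servers, the host lists needed later (for example the `configDB` value in mongos.conf) can be derived from the plan. A minimal sketch; the `servers` and `ports` tables restate the plan above, and the function name `endpoints` is hypothetical:

```javascript
// The three servers and the port assigned to each role in this article.
const servers = ["192.168.1.1", "192.168.1.2", "192.168.1.3"];
const ports = {
  mongos: 27017,
  config: 10004,
  shard1: 10001,
  shard2: 10002,
  shard3: 10003,
};

// Return the host:port endpoint of the given role on every server.
function endpoints(role) {
  const port = ports[role];
  if (port === undefined) {
    throw new Error(`unknown role: ${role}`);
  }
  return servers.map((ip) => `${ip}:${port}`);
}

console.log(endpoints("config").join(","));
// 192.168.1.1:10004,192.168.1.2:10004,192.168.1.3:10004
```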

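Install MongoDB

Install MongoDB through the database option of OneinStack; note that the version is 3.2.8. Then append the following to /etc/rc.local so that transparent huge pages are disabled at boot: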

- cat >> /etc/rc.local << EOF

- echo never > /sys/kernel/mm/transparent_hugepage/enabled

- echo never > /sys/kernel/mm/transparent_hugepage/defrag

- EOF
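
After a reboot it can be useful to confirm that the setting took effect. The kernel reports the active value in square brackets, for example `always madvise [never]`. A minimal sketch of reading it; the helper names `activeThpSetting` and `readThpSetting` are hypothetical and not part of any MongoDB tooling:

```javascript
const fs = require("fs");

// Extract the bracketed (active) value from the kernel's THP status line.
function activeThpSetting(text) {
  const match = text.match(/\[(\w+)\]/);
  return match ? match[1] : null;
}

// Read the current setting, or return null where the file does not exist
// (for example on a non-Linux machine).
function readThpSetting(path = "/sys/kernel/mm/transparent_hugepage/enabled") {
  if (!fs.existsSync(path)) {
    return null;
  }
  return activeThpSetting(fs.readFileSync(path, "utf8"));
}

console.log(activeThpSetting("always madvise [never]\n")); // never
console.log(readThpSetting());
```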

Edit the configuration

Run the following on all three nodes, for example on 192.168.1.1:

- mkdir /usr/local/mongodb/data/{configsvr,mongos,shard1,shard2,shard3}

- mkdir /usr/local/mongodb/etc/keyfile

- >/usr/local/mongodb/etc/keyfile/linuxeye

/usr/local/mongodb/etc/shard1.conf

- systemLog:
  - destination: file
  - path: /usr/local/mongodb/log/shard1.log
  - logAppend: true
- processManagement:
  - fork: true
  - pidFilePath: "/usr/local/mongodb/data/shard1/shard1.pid"
- net:
  - port: 10001
- storage:
  - dbPath: "/usr/local/mongodb/data/shard1"
  - engine: wiredTiger
  - journal:
    - enabled: true
  - directoryPerDB: true
- operationProfiling:
  - slowOpThresholdMs: 10
  - mode: "slowOp"
- #security:
  - #keyFile: "/usr/local/mongodb/etc/keyfile/linuxeye"
  - #clusterAuthMode: "keyFile"
- replication:
  - oplogSizeMB: 50
  - replSetName: "shard1_linuxeye"
  - secondaryIndexPrefetch: "all"

/usr/local/mongodb/etc/shard2.conf

- systemLog:
  - destination: file
  - path: /usr/local/mongodb/log/shard2.log
  - logAppend: true
- processManagement:
  - fork: true
  - pidFilePath: "/usr/local/mongodb/data/shard2/shard2.pid"
- net:
  - port: 10002
- storage:
  - dbPath: "/usr/local/mongodb/data/shard2"
  - engine: wiredTiger
  - journal:
    - enabled: true
  - directoryPerDB: true
- operationProfiling:
  - slowOpThresholdMs: 10
  - mode: "slowOp"
- #security:
  - #keyFile: "/usr/local/mongodb/etc/keyfile/linuxeye"
  - #clusterAuthMode: "keyFile"
- replication:
  - oplogSizeMB: 50
  - replSetName: "shard2_linuxeye"
  - secondaryIndexPrefetch: "all"

/usr/local/mongodb/etc/shard3.conf

- systemLog:
  - destination: file
  - path: /usr/local/mongodb/log/shard3.log
  - logAppend: true
- processManagement:
  - fork: true
  - pidFilePath: "/usr/local/mongodb/data/shard3/shard3.pid"
- net:
  - port: 10003
- storage:
  - dbPath: "/usr/local/mongodb/data/shard3"
  - engine: wiredTiger
  - journal:
    - enabled: true
  - directoryPerDB: true
- operationProfiling:
  - slowOpThresholdMs: 10
  - mode: "slowOp"
- #security:
  - #keyFile: "/usr/local/mongodb/etc/keyfile/linuxeye"
  - #clusterAuthMode: "keyFile"
- replication:
  - oplogSizeMB: 50
  - replSetName: "shard3_linuxeye"
  - secondaryIndexPrefetch: "all"
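
The three shard files differ only in the shard name and port. Because YAML nesting depends on indentation, the sketch below renders one file per shard with two-space indentation. The function name `shardConfigYaml` is hypothetical, the nesting is an assumption based on MongoDB's documented option names (for example `storage.journal.enabled`), and the commented-out `security` block is left out.

```javascript
// Render the YAML text of shard<n>.conf with explicit two-space indentation.
function shardConfigYaml(n) {
  const name = `shard${n}`;
  const base = "/usr/local/mongodb";
  const lines = [
    "systemLog:",
    "  destination: file",
    `  path: ${base}/log/${name}.log`,
    "  logAppend: true",
    "processManagement:",
    "  fork: true",
    `  pidFilePath: "${base}/data/${name}/${name}.pid"`,
    "net:",
    `  port: ${10000 + n}`, // shard1 -> 10001, shard2 -> 10002, shard3 -> 10003
    "storage:",
    `  dbPath: "${base}/data/${name}"`,
    "  engine: wiredTiger",
    "  journal:",
    "    enabled: true",
    "  directoryPerDB: true",
    "operationProfiling:",
    "  slowOpThresholdMs: 10",
    '  mode: "slowOp"',
    "replication:",
    "  oplogSizeMB: 50",
    `  replSetName: "${name}_linuxeye"`,
    '  secondaryIndexPrefetch: "all"',
  ];
  return lines.join("\n") + "\n";
}

// Print the three files one after another.
for (const n of [1, 2, 3]) {
  console.log(`# /usr/local/mongodb/etc/shard${n}.conf`);
  console.log(shardConfigYaml(n));
}
```

The output of each call could be written to the matching path under /usr/local/mongodb/etc/ instead of being printed.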

/usr/local/mongodb/etc/configsvr.conf

- systemLog:
  - destination: file
  - path: /usr/local/mongodb/log/configsvr.log
  - logAppend: true
- processManagement:
  - fork: true
  - pidFilePath: "/usr/local/mongodb/data/configsvr/configsvr.pid"
- net:
  - port: 10004
- storage:
  - dbPath: "/usr/local/mongodb/data/configsvr"
  - engine: wiredTiger
  - journal:
    - enabled: true
- #security:
  - #keyFile: "/usr/local/mongodb/etc/keyfile/linuxeye"
  - #clusterAuthMode: "keyFile"
- sharding:
  - clusterRole: configsvr

/usr/local/mongodb/etc/mongos.conf

- systemLog:
  - destination: file
  - path: /usr/local/mongodb/log/mongos.log
  - logAppend: true
- processManagement:
  - fork: true
  - pidFilePath: /usr/local/mongodb/data/mongos/mongos.pid
- net:
  - port: 27017
- sharding:
  - configDB: 192.168.1.1:10004,192.168.1.2:10004,192.168.1.3:10004
- #security:
  - #keyFile: "/usr/local/mongodb/etc/keyfile/linuxeye"
  - #clusterAuthMode: "keyFile"

Start the shard mongod instances on each server

- /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/etc/shard1.conf

- /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/etc/shard2.conf

- /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/etc/shard3.conf

Configure the replica sets

- /usr/local/mongodb/bin/mongo --port 10001

- use admin

- config = { _id:"shard1_linuxeye", members:[

- {_id:0,host:"192.168.1.1:10001"},

- {_id:1,host:"192.168.1.2:10001",arbiterOnly:true},

- {_id:2,host:"192.168.1.3:10001"}

- ]

- }

- rs.initiate(config)

- /usr/local/mongodb/bin/mongo --port 10002

- use admin

- config = { _id:"shard2_linuxeye", members:[

- {_id:0,host:"192.168.1.1:10002"},

- {_id:1,host:"192.168.1.2:10002"},

- {_id:2,host:"192.168.1.3:10002",arbiterOnly:true}

- ]

- }

- rs.initiate(config)

- /usr/local/mongodb/bin/mongo --port 10003

- use admin

- config = { _id:"shard3_linuxeye", members:[

- {_id:0,host:"192.168.1.1:10003",arbiterOnly:true},

- {_id:1,host:"192.168.1.2:10003"},

- {_id:2,host:"192.168.1.3:10003"}

- ]

- }

- rs.initiate(config)
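
The three configuration documents share one shape: three members, one of which is marked `arbiterOnly`. A minimal sketch of generating that document; the function name `replicaSetConfig` and its `arbiterIndex` parameter are hypothetical, and the result is a plain object of the kind passed to `rs.initiate()` in the mongo shell:

```javascript
// Build a replica set configuration document in which the member at
// arbiterIndex is an arbiter and all other members hold data.
function replicaSetConfig(name, hosts, arbiterIndex) {
  if (arbiterIndex < 0 || arbiterIndex >= hosts.length) {
    throw new RangeError(`arbiterIndex ${arbiterIndex} is out of range`);
  }
  return {
    _id: name,
    members: hosts.map((host, i) => {
      const member = { _id: i, host };
      if (i === arbiterIndex) {
        member.arbiterOnly = true;
      }
      return member;
    }),
  };
}

// Reproduces the shard1 document shown above.
const shard1Config = replicaSetConfig(
  "shard1_linuxeye",
  ["192.168.1.1:10001", "192.168.1.2:10001", "192.168.1.3:10001"],
  1
);
console.log(JSON.stringify(shard1Config, null, 2));
```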

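Note: the steps above configure the replica sets. Related commands such as rs.status() show the state of each replica set.

Start the configsvr and mongos nodes on all three machines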

- /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/etc/configsvr.conf

Then start mongos on each machine

- /usr/local/mongodb/bin/mongos -f /usr/local/mongodb/etc/mongos.conf

Configure the shards

Add the shards from the 192.168.1.1 machine:

- /usr/local/mongodb/bin/mongo --port 27017

- use admin

- db.runCommand({addshard:"shard1_linuxeye/192.168.1.1:10001,192.168.1.2:10001,192.168.1.3:10001"});

- db.runCommand({addshard:"shard2_linuxeye/192.168.1.1:10002,192.168.1.2:10002,192.168.1.3:10002"});

- db.runCommand({addshard:"shard3_linuxeye/192.168.1.1:10003,192.168.1.2:10003,192.168.1.3:10003"});
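
Each `addshard` argument is the replica set name, a slash, and a comma-separated list of members. A small sketch of assembling the three command documents used above; the function names `shardSeed` and `addShardCommand` are hypothetical:

```javascript
// Build the "replSetName/host1,host2,..." string that addshard expects.
function shardSeed(replSetName, hosts) {
  if (hosts.length === 0) {
    throw new Error("at least one host is required");
  }
  return `${replSetName}/${hosts.join(",")}`;
}

// Build the command document passed to db.runCommand() on mongos.
function addShardCommand(replSetName, hosts) {
  return { addshard: shardSeed(replSetName, hosts) };
}

const ips = ["192.168.1.1", "192.168.1.2", "192.168.1.3"];
for (const n of [1, 2, 3]) {
  const hosts = ips.map((ip) => `${ip}:${10000 + n}`);
  console.log(JSON.stringify(addShardCommand(`shard${n}_linuxeye`, hosts)));
}
```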

View the sharding status

- mongos> sh.status()

- --- Sharding Status ---

- sharding version: {

- "_id" : 1,

- "minCompatibleVersion" : 5,

- "currentVersion" : 6,

- "clusterId" : ObjectId("5a55af962f787566bce05b78")

- }

- shards:

- { "_id" : "shard1_linuxeye", "host" : "shard1_linuxeye/192.168.1.1:10001,192.168.1.2:10001" }

- { "_id" : "shard2_linuxeye", "host" : "shard2_linuxeye/192.168.1.1:10002,192.168.1.2:10002" }

- { "_id" : "shard3_linuxeye", "host" : "shard3_linuxeye/192.168.1.1:10003,192.168.1.2:10003" }

- active mongoses:

- "3.2.8" : 3

- balancer:

- Currently enabled: yes

- Currently running: no

- Failed balancer rounds in last 5 attempts: 0

- Migration Results for the last 24 hours:

- No recent migrations

- databases:

List the shards

- mongos> db.runCommand( {listshards : 1 } )

- {

- "shards" : [

- {

- "_id" : "shard1_linuxeye",

- "host" : "shard1_linuxeye/192.168.1.1:10001,192.168.1.2:10001"

- },

- {

- "_id" : "shard2_linuxeye",

- "host" : "shard2_linuxeye/192.168.1.1:10002,192.168.1.2:10002"

- },

- {

- "_id" : "shard3_linuxeye",

- "host" : "shard3_linuxeye/192.168.1.1:10003,192.168.1.2:10003"

- }

- ],

- "ok" : 1

- }
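
Each `host` value has the form `replicaSetName/member1,member2`. Only two members appear per shard in this output, most likely because arbiters hold no data and are therefore not reported in the shard's host list. A small sketch for splitting the string (the function name `parseShardHost` is hypothetical):

```javascript
// Split a shard "host" string into its replica set name and member list.
// A string without a slash is treated as a single standalone host.
function parseShardHost(host) {
  const slash = host.indexOf("/");
  if (slash === -1) {
    return { replSet: null, members: [host] };
  }
  return {
    replSet: host.slice(0, slash),
    members: host.slice(slash + 1).split(","),
  };
}

console.log(parseShardHost("shard1_linuxeye/192.168.1.1:10001,192.168.1.2:10001"));
```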

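Enable sharding for the database named 'linuxeye' (the sh.enableSharding helper and the enablesharding command do the same thing, so either one is enough)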

- use admin

- mongos> sh.enableSharding("linuxeye")

- { "ok" : 1 }

- db.runCommand({"enablesharding":"linuxeye"})

Shard the collection:

- db.runCommand({shardcollection:'linuxeye.LiveAppMesssage',"key":{"_id":1}})
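
The `shardcollection` command takes the full namespace (`database.collection`) and a shard key document. A minimal sketch that builds both command documents for a given collection; the function name `shardingCommands` is hypothetical, and the documents would be passed to `db.runCommand()` against the admin database on mongos:

```javascript
// Build the two command documents: enable sharding on the database,
// then shard one collection on the given key.
function shardingCommands(database, collection, key) {
  if (!database || !collection) {
    throw new Error("database and collection names are required");
  }
  return [
    { enablesharding: database },
    { shardcollection: `${database}.${collection}`, key },
  ];
}

const commands = shardingCommands("linuxeye", "LiveAppMesssage", { _id: 1 });
for (const command of commands) {
  console.log(JSON.stringify(command));
}
```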

Check the status

- use linuxeye

- db.LiveAppMesssage.stats()

Thu Mar 1 09:28:08 CST 2018